Tomorrow’s Intelligence: AI Now and in the Coming Decades

INTRODUCTION

In the broadest sense, the term artificial intelligence (AI) refers to the field of study that aims to simulate the workings of a human brain in a man-made device. The term AI is sometimes also used for the devices themselves.

What does it mean to ‘simulate human intelligence’? The question matters because current AI can perform many tasks far beyond human ability, yet it is unable to perform other tasks that seem like common sense to us. For example, DeepMind’s computer program AlphaGo learned the game of Go in a matter of months and mastered it beyond the level of any human player. On the other hand, self-driving cars are still highly unreliable on roads with poor markings or in severe weather conditions. It is therefore best to frame the goal of AI more precisely, as follows:

To empower machines to perceive their surroundings and to learn from data and experience in order to achieve a set goal.

Ideas resembling AI, such as myths about artificial beings, are almost as old as human civilisation. However, it was only after Alan Turing gave a mathematical formalisation of the notion of computation (1936) that the idea of non-biological intelligence started to lose its science-fiction flavour. In a paper of a more philosophical nature, ‘Computing Machinery and Intelligence’ (1950), published a few years before his death, Turing invited his colleagues to consider the question of whether machines can think. This could perhaps be considered the point at which AI became a field of study in its own right.

Since then, the achievements of the field have exceeded expectations while also fuelling scepticism and even conspiracy theories. Given the rate at which technology progresses, it is not easy to formulate reasonable expectations of what AI will achieve within a given time frame. In the present text we examine the current state of AI devices as well as the current business outlook. We also discuss discrepancies between current AI devices and human brains, which could be food for thought towards the goal of AI stated above.

CHAPTER 1

Neural networks and symbolic AI

Just as a formalism for the notion of computation was essential to start the field of computer science, we need to formalise the notion of a neural network to properly study AI.

A biological brain is essentially a network of cells called neurons, which process information by transmitting electrical (and chemical) signals to one another. Learning has been shown to physically change the brain, for example by altering the connections between neurons. The best-known way to model this information processing, and the way connections change as a result of learning, is an (artificial) neural network.

One can picture a neural network as a series of layers of interconnected nodes (or artificial neurons). The first layer is called the input layer and the last layer the output layer. The number of layers, together with the number of neurons in each layer, determines the architecture of the network. Each neuron (except those in the first layer) takes as input the outputs of the previous layer, and its own output can in turn be used as input by the neurons of the next layer. The output of each neuron for a given input is uniquely determined by a set of numerical parameters called weights and biases, together with a choice of activation function. The best way to understand the significance of these mathematical objects without going into technical details is to look at how a neural network learns or, in current jargon, how it is trained.

Suppose we want to use a neural network to determine whether a photo shows a cat or a dog. We have a large set of images, referred to as the training data, for which we know whether the subject portrayed is a cat or a dog. Each image is fed to the network as a sequence of numbers (for example, its pixel values), and the output is a number between 0 and 1 giving the probability that the image shows a cat. Suppose we have an informed guess as to which network architecture and activation functions to use. We then choose the weights and biases of each neuron at random. Note that, at this point, the output of the neural network is completely determined by the input, since the action of the neurons depends solely on the choice of activation functions, weights and biases.
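To make this concrete, here is a minimal sketch of a forward pass through a small network in plain Python. The 2-3-1 architecture, the hand-picked weights and biases, and the helper names (`layer_forward`, `network_forward`) are hypothetical choices for illustration only; real networks are far larger and are usually built with a dedicated library. The point is that once the weights, biases and activation functions are fixed, the output depends only on the input.

```python
import math
from typing import Callable, List, Tuple

# One layer = (weights, biases, activation); weights[j] holds neuron j's input weights.
Layer = Tuple[List[List[float]], List[float], Callable[[float], float]]


def sigmoid(z: float) -> float:
    """Squash any real number into (0, 1), so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Rectified linear unit: keep positive values, replace negatives with 0."""
    return max(0.0, z)


def layer_forward(inputs: List[float], layer: Layer) -> List[float]:
    """Neuron j outputs activation(sum_i weights[j][i] * inputs[i] + biases[j])."""
    weights, biases, activation = layer
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Feed the input through each layer in turn; each output feeds the next layer."""
    out = inputs
    for layer in layers:
        out = layer_forward(out, layer)
    return out


# Hypothetical 2-3-1 network with hand-picked (not trained) parameters.
hidden_layer: Layer = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu)
output_layer: Layer = ([[1.0, -1.0, 0.5]], [0.2], sigmoid)

prediction = network_forward([1.0, 2.0], [hidden_layer, output_layer])
print(prediction)  # a single value, approximately 0.475
```

Running the same input through the same parameters always gives the same output; only training (changing the weights and biases) can change what the network predicts.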

However, since the numerical values were chosen at random, we cannot expect the output to be accurate. A first measure of the accuracy is given by a loss function, which computes an average of the discrepancy between the output of the network on the training data and the ‘correct value’. Using algorithms based on probability and elementary analysis (such as stochastic gradient descent), we can iteratively update the weights and biases so as to minimise the loss function. The gradients needed for each update are computed efficiently by an algorithm called backpropagation (short for ‘backward propagation of errors’), and this whole procedure of iteratively updating the numerical parameters is what is meant by training. Once the weights and biases have been adjusted so as to minimise the loss function, the neural network is said to be trained and is deemed ready to be used on images outside the training set.
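The following is a minimal sketch of this procedure, assuming the simplest possible ‘network’: a single sigmoid neuron trained with stochastic gradient descent on the average binary cross-entropy loss. With only one neuron, the gradient computation that backpropagation performs layer by layer reduces to a single application of the chain rule. The data set is hypothetical: each ‘image’ is replaced by two made-up numerical features, and the label is 1 (‘cat’) whenever the first feature exceeds the second. The learning rate, number of epochs and random seeds are arbitrary illustrative values.

```python
import math
import random
from typing import List, Tuple

Example = Tuple[List[float], int]  # (features, label), label 1 = "cat", 0 = "dog"


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Output of a single sigmoid neuron, read as the probability of 'cat'."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(w: List[float], b: float, data: List[Example]) -> float:
    """Average binary cross-entropy between predictions and the correct labels."""
    eps = 1e-12  # keeps log() away from 0
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train_sgd(data: List[Example], lr: float = 0.5, epochs: int = 50, seed: int = 0):
    """Stochastic gradient descent: update the parameters after every example."""
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0) for _ in data[0][0]]  # random initial weights
    b = rng.uniform(-1.0, 1.0)                          # random initial bias
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a random order each epoch
        for i in order:
            x, y = data[i]
            # For sigmoid + cross-entropy, d(loss)/d(z) simplifies to (p - y);
            # the chain rule then gives (p - y) * x_i for each weight.
            err = predict(w, b, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical toy data: label 1 whenever the first feature exceeds the second.
data_rng = random.Random(42)
data: List[Example] = []
for _ in range(200):
    x = [data_rng.uniform(0.0, 1.0), data_rng.uniform(0.0, 1.0)]
    data.append((x, 1 if x[0] > x[1] else 0))

w, b = train_sgd(data)
accuracy = sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in data) / len(data)
print(f"loss with all-zero parameters: {loss([0.0, 0.0], 0.0, data):.3f}")
print(f"loss after training:           {loss(w, b, data):.3f}")
print(f"training accuracy:             {accuracy:.2f}")
```

Comparing the loss at all-zero parameters (where every prediction is 0.5) with the loss after training shows the minimisation at work. A real image classifier would have many layers and would rely on a library to compute the gradients automatically.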

Even after the learning procedure described above, the neural network could still be far from accurate. One reason for this could be bias in the training data. In the example above, if all the cat photos in the training data showed ginger cats, the network could well struggle when given a photo of a black cat.

The procedure above is one of the simplest in use nowadays. There are more sophisticated procedures in which the architecture of the network itself is changed by adding or removing neurons or layers.

The method of gradient descent typically finds a local minimum of the loss function. This is not necessarily a global minimum, and it may not be unique. In fact, a local minimum is not even guaranteed to exist. There are several more advanced numerical methods for dealing with these issues, each with its own pros and cons. For example, if one expects a loss function with a complicated geometry, there are techniques to ‘smooth’ it so as to make gradient descent more effective.
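The dependence on the starting point can be seen even in one dimension. In this sketch, the function f(x) = x^4 - 3x^2 + x is a hypothetical stand-in for a loss function: it has a global minimum near x ≈ -1.30 and a shallower local minimum near x ≈ 1.13. Plain gradient descent with a fixed learning rate (an illustrative value) lands in a different minimum depending on where it starts.

```python
from typing import Callable


def f(x: float) -> float:
    """A non-convex toy 'loss' with two local minima (hypothetical example)."""
    return x**4 - 3 * x**2 + x


def df(x: float) -> float:
    """Derivative of f, used to take downhill steps."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(grad: Callable[[float], float], x0: float,
                     lr: float = 0.01, steps: int = 1000) -> float:
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


for start in (-2.0, 2.0):
    x_min = gradient_descent(df, start)
    print(f"start {start:+.1f} -> x = {x_min:.4f}, f(x) = {f(x_min):.4f}")
```

Starting from +2, the iterates settle in the shallower minimum and never reach the deeper one on the other side of the hump near x ≈ 0.17; this is exactly the kind of situation the more advanced methods mentioned above try to mitigate.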

A major criticism of AI today is that it works without anyone fully understanding why. The term explainability refers to the field of study that aims to investigate and interpret how AI systems make decisions. This is crucial both for ethical reasons, such as trust and transparency, and for improving current AI systems.

CHAPTER 2

Current hardware and software solutions

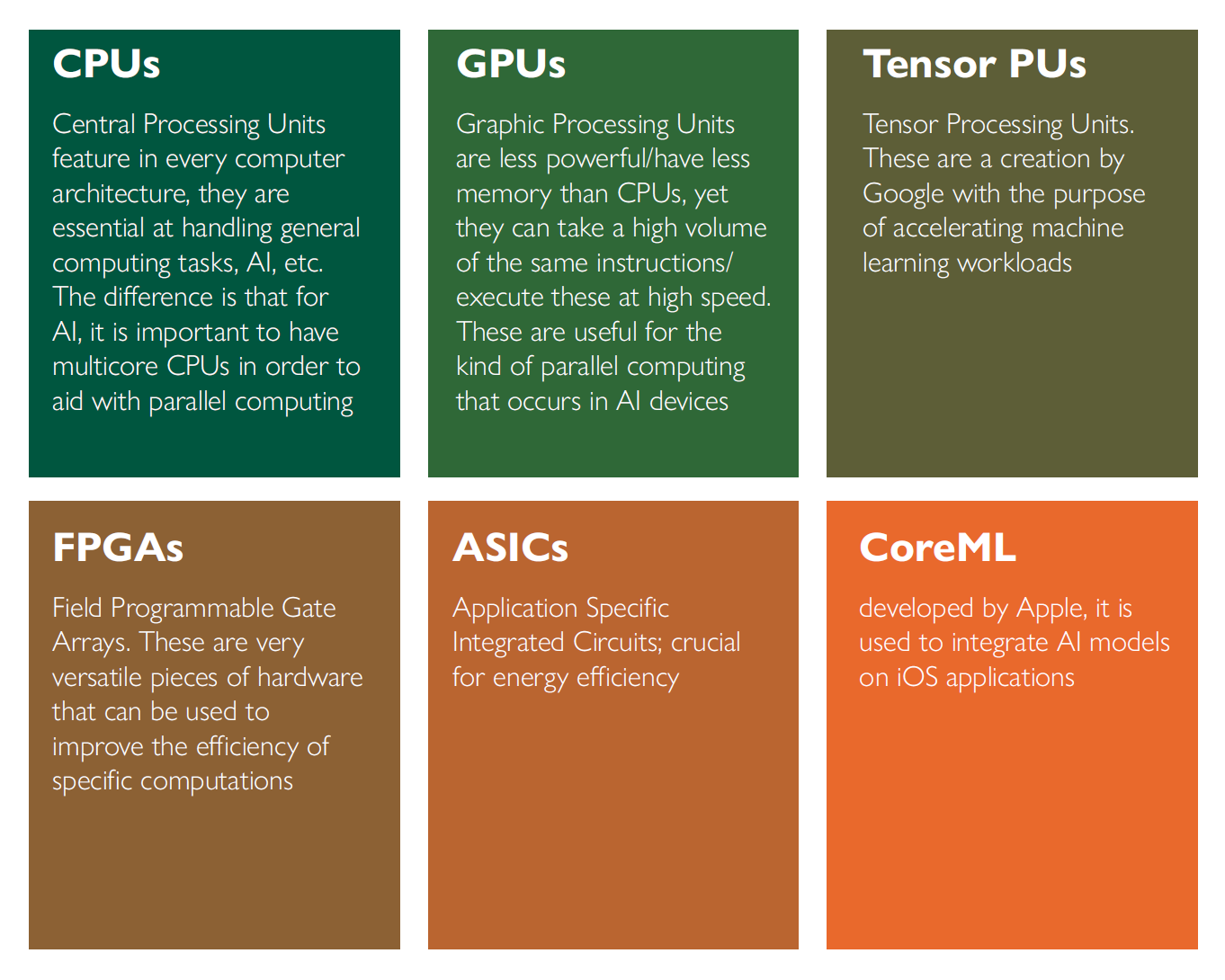

A complex neural network can require substantial resources and computational power during both training and inference. Running it requires software capable of handling large data sets, as well as hardware capable of sustaining long training and inference runs while performing many computations in parallel. Let us summarise the software and hardware solutions most widely used for training and deploying AI today.

Software solutions

Here we include both software for building AI models and tools for optimisation and deployment.

Hardware solutions

In order to optimise the handling of enormous data sets as well as parallel computations, the architecture of an AI device has the following features, some of which are borrowed from ‘ordinary’ computer architecture.

Needless to say, a sufficiently large, rapidly accessible memory is also necessary to handle the training data set as well as the intermediate results of training or inference. Since training sessions can be intensive, stable power supplies are required, as well as efficient cooling solutions to prevent the hardware from overheating (and hence losing efficiency).
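To give a rough sense of scale, the arithmetic below estimates how much memory is needed just to hold a model's parameters. The 7-billion-parameter model size is a hypothetical example, and the figure of about 16 bytes per parameter for training with the Adam optimiser in mixed precision is a commonly quoted rule of thumb, used here as an assumption. None of these figures include the training data or the intermediate results mentioned above, which add further to the total.

```python
def memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Gigabytes (10**9 bytes) needed to store n_params values of the given size."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 7e9  # hypothetical model with 7 billion parameters

scenarios = [
    ("weights only, 32-bit floats", 4),
    ("weights only, 16-bit floats", 2),
    # Assumed rule of thumb for mixed-precision training with Adam:
    # 16-bit weights (2) + 16-bit gradients (2) + 32-bit copy of the
    # weights, momentum and variance (4 + 4 + 4) = 16 bytes per parameter.
    ("training state (approx. 16 bytes/param)", 16),
]

for label, nbytes in scenarios:
    print(f"{label}: {memory_gb(N_PARAMS, nbytes):.0f} GB")
```

Even storing the weights alone already exceeds the memory of many consumer devices, which is why training is spread across many accelerators.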

Limitations of the current technology

Despite the remarkable progress in software, most models (including successful ones such as ChatGPT) require enormous computational resources, as well as memory for intermediate steps, during both training and inference. Training itself relies on huge data sets, and acquiring, maintaining, labelling and handling these is time-consuming and expensive, and also requires a lot of memory. Furthermore, AI models trained on biased data are unreliable. This can raise ethical concerns, so spotting bias in the acquired data is still very much a ‘human’ role. On a slightly different note, another limitation of current AI software is latency: chatbots or video synthesis software may still take a significant amount of time to respond to a user’s input.

The current hardware limitations follow rather naturally from the software discussion above. The storage and computational power requirements raise serious sustainability concerns. In addition, the production and disposal of high-end hardware components involve rare earth metals and other non-renewable resources, contributing to environmental degradation. Cost concerns also arise when one considers scaling up devices, as this would require significant investment in more powerful CPUs, GPUs, etc., as well as adequate infrastructure to support parallel computing on a large scale. Cost issues also prevent smaller companies from emerging and competing with the wealthier establishment.

It is therefore reasonable to expect the current leaders in AI innovation (Google, OpenAI, Microsoft, NVIDIA, Amazon, Meta) to dominate, at least in the near future. On the other hand, Demis Hassabis (CEO of DeepMind) has predicted that AI-designed drugs should be available within the next year or two. This could mean that pharmaceutical companies also have a chance to become leading AI innovators in a more specialised context.

CHAPTER 3

Market dynamics: current and future landscape

The current AI market is very active and growing rapidly, driven by both investment and acquisition strategies. For example, larger companies are acquiring small AI startups to bring more specialised skills into their teams. It is also reassuring that companies recognise the need to collaborate rather than compete against each other in order to be successful and responsible AI innovators. Microsoft and OpenAI, for example, have established a long-term partnership aimed at accelerating their research by combining their respective expertise.

The hope is that such partnerships will also encourage the democratisation of AI. There is also a rise in partnerships between AI companies and industry-specific firms to co-develop tailored solutions, enhancing the applicability and reach of generative AI technologies. For example, Google and PYC have partnered to foster the development of new precision medicine techniques. This was most likely prompted by Google’s success with AlphaFold (developed by its subsidiary DeepMind), an AI system capable of predicting the 3D structure of a protein from its amino-acid sequence.

The following are popular monetisation strategies used by AI companies:

Subscription models, such as OpenAI’s paid subscription to ChatGPT with GPT-4

API licensing, which AI system providers use to let businesses integrate AI into their own applications; this gives the provider a steady source of revenue

Custom solutions for businesses, which are also a lucrative source of revenue

Let us examine the last two points, as they allow businesses to exploit AI capabilities without having to develop them in-house. Common bespoke solutions include plug-ins for popular software, which let customers accelerate their workflow by adding AI functionality. Other services for businesses include AI toolkits with pre-trained models and deployment frameworks, which reduce development effort and costs. Of course, there are also platforms on which a business can build, train and develop its own AI model using ready-made AI infrastructure.

In the context of bespoke AI solutions, it is also important to mention research into smaller language models. Roughly speaking, these can be thought of as less complicated neural networks for language that are designed for a specific context, rather than for general-purpose use like ChatGPT. When trained on more data, they tend to be more efficient than larger models trained on less data. Besides posing fewer problems for explainability and, of course, being able to run on smaller devices, smaller language models help democratise AI, since they can be run by less wealthy institutions.

It is interesting to note that, whilst AI is often accused of ‘stealing jobs’ from humans, AI itself can also encourage adjacent business models to emerge. Here are a few examples:

Data annotation services: high-quality data is crucial for training AI models. Businesses offering data annotation and curation services are in high demand to support AI development.

AI ethics and compliance consulting services: as AI adoption grows, so does the need for ensuring ethical use and compliance with regulations. Consulting firms specializing in AI ethics and compliance are emerging to fill this gap.

AI-powered content creation: companies are leveraging generative AI for content creation in areas such as marketing, design and entertainment. This includes AI-generated text, images and videos tailored to specific business needs.

CHAPTER 4

What is the next step in AI innovation?

In the previous chapter we sketched a possible picture of the AI market in the near future. A longer-term outlook depends heavily on the development of technology (both hardware and software) that addresses the current limitations of AI discussed in Chapter 2. The main innovations that AI currently needs relate mostly to sustainability and efficiency. The development of specialised AI hardware, such as tensor processing units (TPUs) and neuromorphic chips, directly addresses this issue, and companies like Google, NVIDIA and Intel are leading this effort. In addition, advances in edge computing could enable generative AI models to run efficiently on local devices, reducing latency and enhancing privacy. This is particularly important because the cost of cloud computing is likely to increase as the available hardware becomes scarcer. Such scarcity is to be expected, since GPUs are fabricated using some rare metals; thus a push towards democratising AI will probably remain a hot topic for years to come.

On the software side, advances in self-supervised learning are expected to reduce the dependence on labelled data, allowing AI models to learn from vast amounts of unstructured data. This should enhance the models’ understanding and generalisation capabilities, making them more robust and applicable to a wider range of tasks. There is also an ongoing effort to improve methods for incorporating human feedback into the training process (as in reinforcement learning from human feedback, or RLHF), which should lead to better-aligned and more user-centric AI systems. The aim of this approach is to fine-tune models based on human preferences and ethical considerations.

This could be the first step towards a more interactive form of AI. In addition, an AI device that accepts feedback from humans could be seen as more ‘trustworthy’, and such machines could be incredibly useful in education.
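The key idea behind the self-supervised learning mentioned above is that the labels are derived from the data itself. A standard example, used to pre-train many language models, is next-word prediction: the ‘correct answer’ for each context is simply the word that follows it in raw text. The minimal sketch below builds such (context, target) training pairs; the function name `next_word_pairs`, the whitespace tokenisation and the context size are simplifying assumptions for illustration.

```python
from typing import List, Tuple

Pair = Tuple[Tuple[str, ...], str]  # (context words, word to predict)


def next_word_pairs(text: str, context_size: int = 2) -> List[Pair]:
    """Turn unlabelled text into (context, target) training examples.

    The 'label' of each example is simply the word that follows the context
    in the raw text, so no human annotation is required.
    """
    words = text.split()  # naive whitespace tokenisation (an assumption)
    pairs: List[Pair] = []
    for i in range(context_size, len(words)):
        pairs.append((tuple(words[i - context_size:i]), words[i]))
    return pairs


for context, target in next_word_pairs("the cat sat on the mat"):
    print(context, "->", target)
# ('the', 'cat') -> sat
# ('cat', 'sat') -> on
# ('sat', 'on') -> the
# ('on', 'the') -> mat
```

Any amount of raw text can be turned into training examples this way, which is what makes the approach attractive when labelled data is scarce.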

It is important to note that any significant development in quantum computing could entirely change the direction of AI technologies. By exploiting quantum effects such as superposition and entanglement, quantum computers could potentially speed up certain machine learning algorithms, which could lead to faster training of models, especially in deep learning. In addition, quantum algorithms can outperform classical ones on certain tasks, possibly including some of those involved in training and inference on neural networks. Research into quantum neural networks has also suggested ways in which these could outperform their classical counterparts.

Quantum technologies could also make encryption safer (for example through quantum key distribution), which matters when handling the enormous amounts of data required to train a neural network. Overall, quantum computing promises to enhance machine learning, improve optimisation and enable new types of AI applications, potentially leading to breakthroughs that are not feasible with classical computers alone. However, it is unclear when quantum computers will be widely available, let alone ones suitable for training an AI model. While some quantum computers have already found use in healthcare research (e.g., an IBM quantum computer at the Cleveland Clinic), some expect that these devices will not be widely available for another 40-50 years. Yet investors seem very keen on quantum computation despite its slow progress, because its potential, if coupled with AI, is enormous. Previously, we mentioned some potential leaders of the next stage of AI innovation. Here is how they might contribute to the development.

Notably, Engineered Arts, the company behind the highly interactive humanoid robot Ameca, could be at the start of a new tech trend. Although it is unlikely that such humanoid robots will be used in daily life in the next few years, they could in future be useful for tasks requiring little skill but infinite patience.

CHAPTER 5

Toward general artificial intelligence

There is a general trend in AI devices today: they tend to perform beyond human capabilities on a restricted set of tasks, but not across a broader range of cognitive tasks. For example, AlphaGo is incredible at playing Go, but that is about it. Chatbots such as ChatGPT arguably have a broader range of skills, but ultimately what they do is mimic human language, and their problem-solving abilities are weak. By general artificial intelligence we usually mean a form of AI at least as capable as humans across a wide range of tasks. Scientists do not fully agree on what this ‘range of tasks’ should include, and the philosophical debate about what it means for a machine to be as intelligent as humans has many interesting facets. However, one thing seems certain: the road to general AI is still long, and the timeline is uncertain.

Before going into a philosophical discussion, let us look at a more pragmatic side of the roadmap to general AI. In the previous sections, we have already described the need for more efficiency in computation, memory and energy. Quantum computing could have a very strong impact on this front although, as we have stressed, it is unlikely that such impact will be seen within the next 5 years.

Another, more hardware-based approach to AI is neuromorphic computing. Roughly speaking, this ‘paradigm’ of AI aims to construct a physical brain, in which the artificial neurons are implemented directly on neuromorphic chips. It differs from the neural network approach in many ways, the main one being that neural networks are an abstract model, whereas neuromorphic computing is about building a physical brain, so the focus is on the hardware. The most remarkable thing about neuromorphic hardware is that it is very energy efficient, perhaps because its neurons communicate in a similar way to biological neurons, through discrete electrical spikes. Also, while neural networks must be retrained to incorporate new data, neuromorphic devices have an ‘adaptive’ learning style closely reminiscent of the neuroplasticity of a human brain. Further advantages of such devices are low latency and very high parallel computing power at a much lower energy cost.
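Many neuromorphic chips implement spiking neurons, which communicate through brief discrete pulses (spikes) rather than continuous numbers. A common textbook model of such a neuron is the leaky integrate-and-fire (LIF) neuron, simulated in software in the minimal sketch below. The parameter values (time constant, threshold, reset value) are illustrative assumptions, and the code does not describe how any particular chip works.

```python
from typing import List


def simulate_lif(input_current: List[float], dt: float = 1.0, tau: float = 10.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0,
                 v_reset: float = 0.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    The membrane potential v is pushed up by the input current, leaks back
    towards v_rest with time constant tau, and whenever it reaches v_threshold
    the neuron emits a spike and v is reset.
    """
    v = v_rest
    spike_times: List[int] = []
    for t, current in enumerate(input_current):
        # Forward-Euler step of  tau * dv/dt = -(v - v_rest) + current
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            spike_times.append(t)  # the only information passed on: "a spike at t"
            v = v_reset
    return spike_times


print(simulate_lif([0.5] * 50))  # weak input: potential settles near 0.5, prints []
print(simulate_lif([2.0] * 50))  # stronger input: prints [6, 13, 20, 27, 34, 41, 48]
```

With a weak constant input the potential levels off below the threshold and the neuron stays silent; with a stronger input it fires at regular intervals, so information is carried by the timing of the spikes.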

There are still, however, many challenges preventing neuromorphic computing from taking off. For example, the manufacturing process is very specialised, so the industry is not ready to meet a high demand for such devices. The software and hardware are not yet standardised, and while neuromorphic learning could be more efficient than that of neural networks, how to train such devices is still not well understood. In summary, neuromorphic computing could lead the way to a new form of AI, but it is still in its infancy and it is too early to say whether it will pave the way to general AI. Thus far, IBM and Intel have made the biggest contributions to the field.

On the software side, researchers have mostly focused on making the training process of neural networks more efficient, and reducing the dependence on labelled data is one aspect of this. There is also some effort to incorporate symbolic reasoning (which is more natural to humans) into machine learning since, so far, the reasoning of a neural network is mostly statistical, and this is what undermines the transparency and trustworthiness of current AI models.

Given the costs of AI research, we expect the market on the way to general AI to be led by the usual big tech companies mentioned throughout this text (Google, Microsoft, NVIDIA, Meta, Amazon, IBM, etc.). Startups will keep appearing, but the only ways for them to continue their mission will be to be acquired by bigger companies or to attract very large investments. Should AI find a highly meaningful application in a specific field of science, we can expect other big companies to take a share of the stage.

As mentioned earlier, AI has proven successful on the medical front, so we can expect big pharmaceutical companies to emerge on the AI research stage. More generally, the main area where AI surpasses human capacity is statistical reasoning, so it would be interesting to see what other scientific progress can be motivated and led by AI models. Demis Hassabis, for example, has suggested studying the Universe at the Planck scale using AI. Physicists have been using machine learning techniques to handle data since the 1990s, and since 2001 CERN has partnered with many leading universities and AI companies to engage in AI research. It would be interesting to see whether this collaboration paves the way to realising Hassabis’ vision.

Let us now take a more philosophical turn: what can philosophy tell us about the pathway to general AI?

Mustafa Suleyman, CEO of Microsoft AI and cofounder of DeepMind, suggests viewing AI as a ‘digital species’ rather than a mere useful tool. With this framework, we can start thinking about the discrepancy between artificial and biological intelligence in terms of evolution. In fact, the human brain is a product of millions of years of evolution by natural selection, whereas AI, as rapidly as it has evolved, has been around for just under a century.

The ability to generalise from past experience in order to perform a wide variety of tasks is arguably a product of evolution and, as remarked above, is lacking in current AI machines, which perform well only on very specialised tasks. It can also be argued that human intelligence is significantly influenced by social interaction. Not only does society encourage cultural accumulation and cooperation, but social interactions also physically change the brain through neuroplasticity. AI devices still lack this opportunity to interact with other members of their species.

Another product of evolution that strongly influences the brain, and that AI enthusiasts might want to consider, is the need for humans (and other animals) to sleep. The benefits of good sleeping habits for animals’ cognitive abilities are well known, though there are still wide gaps in our understanding of certain mechanisms. There are also quite a few anecdotes about the benefits of sleep for creativity: Mendeleev, for example, claimed to have come up with the periodic table in his sleep, and Otto Loewi claimed to have made his breakthrough on neurotransmission after waking up and writing down his dream. In very loose terms, sleep seems to foster the formation of new neural pathways and connections that seem impossible when awake. The question is: can we simulate this radical change of neural connections in AI?

One can argue that what drives natural selection is the innate desire of individuals to transmit their genes to the next generation of their species, and this does not come without an awareness of one’s mortality. Humans in particular are still somewhat obsessed with mortality and feel the need to ‘survive’ in some afterlife. This is independent of religious faith, as it is the motor behind the need to procreate and to produce something everlasting, whether art, music, literature, etc. So, arguably, one incentive for original human creativity is the awareness of mortality and the need to live on through one’s creations after death. Would this really be necessary for AI to gain original creative power? As far as we are aware, today’s AI can create a ‘near perfect’ violin by learning the architecture of violins that humans consider flawless... which is all very well, until you ask whether AI could actually ‘invent’ a musical instrument out of its own desire.

In conclusion, we have argued that a big gap between artificial and biological intelligence is represented by the gifts of evolution. One could ask whether the only path towards general AI is to allow AI to ‘procreate’ on its own and follow the laws of natural selection. However, the day AI starts building other AI will be a day on which humans feel very sorry for themselves. Rather than asking what the path towards general AI is, the appropriate question is probably ‘what do we want our AI to do?’. Even today’s AI, with all its flaws, is already changing the world in both positive and negative ways, and the ethical debates will become ever more important as we progress into new stages of the information era. In such times it is always good to remind oneself that technology cannot make the world a better place, but humans can.

Symbolic AI

This is colloquially referred to as ‘good old-fashioned AI’ (GOFAI), since the first attempts at constructing artificial intelligence were based on this model. In symbolic AI the machine is programmed to reason using axioms and inference rules. The main advantage over AI models based on neural networks is thus immediate: there is no need for training data. Symbolic AI is also more transparent, as symbolic reasoning is much closer to human reasoning. It is also more adaptable to different domains, in the sense of ‘generalising skills’.

The main downside of purely symbolic AI models is that they are programmed by hand. In effect, ‘training’ requires human labour to feed the knowledge and inference rules into the machine. This means that symbolic AI cannot be used in fields where knowledge is scarce. Scalability is also a serious issue.

Medical diagnosis is a field where symbolic AI has been very successful. Siri is also based on symbolic AI, and some aspects of self-driving cars use symbolic reasoning (for example, when reading road signs there is a very clear human rule along the lines of ‘stop if you see a STOP sign’).
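The idea of reasoning with axioms and inference rules can be sketched as forward chaining: starting from the known facts, repeatedly apply every rule whose premises are all satisfied until nothing new can be derived. The small rule base below, built around the STOP-sign example, and its fact names are hypothetical; real systems use far richer logics and much larger knowledge bases, which, as noted above, must be written by hand.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule reads: "if all premises are known facts, conclude the conclusion".
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)  # one step of modus ponens
                changed = True
    return known


# Hypothetical hand-written rule base.
RULES: List[Rule] = [
    (frozenset({"sees_stop_sign"}), "must_stop"),
    (frozenset({"must_stop", "vehicle_moving"}), "apply_brakes"),
]

print(sorted(forward_chain({"sees_stop_sign", "vehicle_moving"}, RULES)))
# prints ['apply_brakes', 'must_stop', 'sees_stop_sign', 'vehicle_moving']
```

No training data is involved, and every derived fact can be traced back to the rules that produced it, which is where the transparency of symbolic AI comes from.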

How to combine symbolic AI with neural networks?

Many researchers believe that an important step towards improving current AI models (let alone heading towards general AI) is to integrate neural networks with symbolic AI, that is, statistical with logical reasoning. By the term neurosymbolic AI we usually mean hybrid AI models combining symbolic AI and neural networks. This field of research is not that new, as many successful AI models are arguably neurosymbolic. One of ChatGPT’s plugins, for example, calls on WolframAlpha whenever more mathematical reasoning is required. AlphaGo is also a successful example of neurosymbolic reasoning, as it combines neural networks with a tree search over possible moves. Another interesting example is the Neural Theorem Prover, in which the neural network is built from the symbolic rules.
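One simple way to picture such a hybrid is a pipeline in which a statistical component turns raw input into a confidence score, and an explicit rule then decides what to do with it. In the minimal sketch below, a single sigmoid neuron with hand-picked weights stands in for a trained neural network, and the two input features (how red and how octagonal an object looks) are hypothetical. It illustrates the general pattern only, not how any of the systems named above actually work.

```python
import math
from typing import List, Tuple


def neural_stop_sign_score(features: List[float]) -> float:
    """Statistical part: a single sigmoid neuron standing in for a trained network.

    The weights are hypothetical and hand-picked, not learned.
    features = [redness, octagon_likeness], each between 0 and 1.
    """
    weights, bias = [4.0, 3.0], -3.5
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def symbolic_decision(facts: set) -> Tuple[str, str]:
    """Symbolic part: an explicit, human-readable rule over discrete facts."""
    if "stop_sign" in facts and "vehicle_moving" in facts:
        return "brake", "rule fired: stop_sign AND vehicle_moving -> brake"
    return "continue", "no rule fired"


def neurosymbolic_step(features: List[float], vehicle_moving: bool,
                       threshold: float = 0.9) -> Tuple[str, str, float]:
    """Turn the network's confidence into a discrete fact, then let the rule decide."""
    score = neural_stop_sign_score(features)
    facts = set()
    if score >= threshold:
        facts.add("stop_sign")
    if vehicle_moving:
        facts.add("vehicle_moving")
    action, reason = symbolic_decision(facts)
    return action, reason, score


print(neurosymbolic_step([1.0, 1.0], vehicle_moving=True))  # confident detection -> brake
print(neurosymbolic_step([0.2, 0.1], vehicle_moving=True))  # low score -> continue
```

Each decision comes with a human-readable reason, which is the kind of transparency the symbolic side is meant to contribute, while the neural side handles the messy perception.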

Nevertheless, we are still far from understanding the best systematic way to combine symbolic AI with neural networks. All of the systems mentioned above still rely on enormous amounts of data for their training and, in the case of chatbots, are still affected by hallucinations (that is, ‘inventing’ knowledge, such as books that were never written). It is also important to note that while more symbolic reasoning could mean less reliance on data, it also requires more computational power.

There does not seem to be much investment in neurosymbolic AI research; however, it is considered by many to be our greatest hope for fixing hallucination problems in chatbots without resorting to brute-force fine-tuning. Healthcare would certainly benefit from efficient neurosymbolic AI models, as these could be used for diagnoses (which require thorough logical, inference-based reasoning) and for drug repurposing.

IBM and Google are amongst the big names involved in neurosymbolic AI research. Arguably, we still do not understand how the human brain combines statistical and logical reasoning, so knowledge in cognitive neuroscience could be useful in this context.